Frederik Ebert

I earned my PhD in Computer Science from UC Berkeley in May 2022, where I was advised by Prof. Sergey Levine and Prof. Chelsea Finn (Stanford CS department). My research at the Berkeley Artificial Intelligence Research Lab (BAIR) focused on data-driven robotic manipulation, emphasizing generalizable learning across tasks, environments, and embodiments using large-scale data, deep generative models, imitation learning, reinforcement learning, tactile sensing, and hierarchical control.

After graduating, I founded Emancro, where I led the development of learning-based robotic systems in real-world hospital settings, gaining first-hand insight into the limitations of current robotic foundation models under distribution shift, long-horizon tasks, and reliability constraints.

I am broadly interested in developing scalable robotic foundation models and world-model-based control systems that leverage diverse, multimodal interaction data to enable fast, reliable, and robust manipulation in complex real-world environments.

Publications

Pre-Training for Robots: Offline RL Enables Learning New Tasks from a Handful of Trials

This paper introduces Pre-Training for Robots (PTR), an offline reinforcement learning framework that leverages large, diverse robot datasets and as few as 10 task-specific demonstrations to rapidly adapt to new tasks in unseen environments. PTR also supports online RL fine-tuning in the real world.

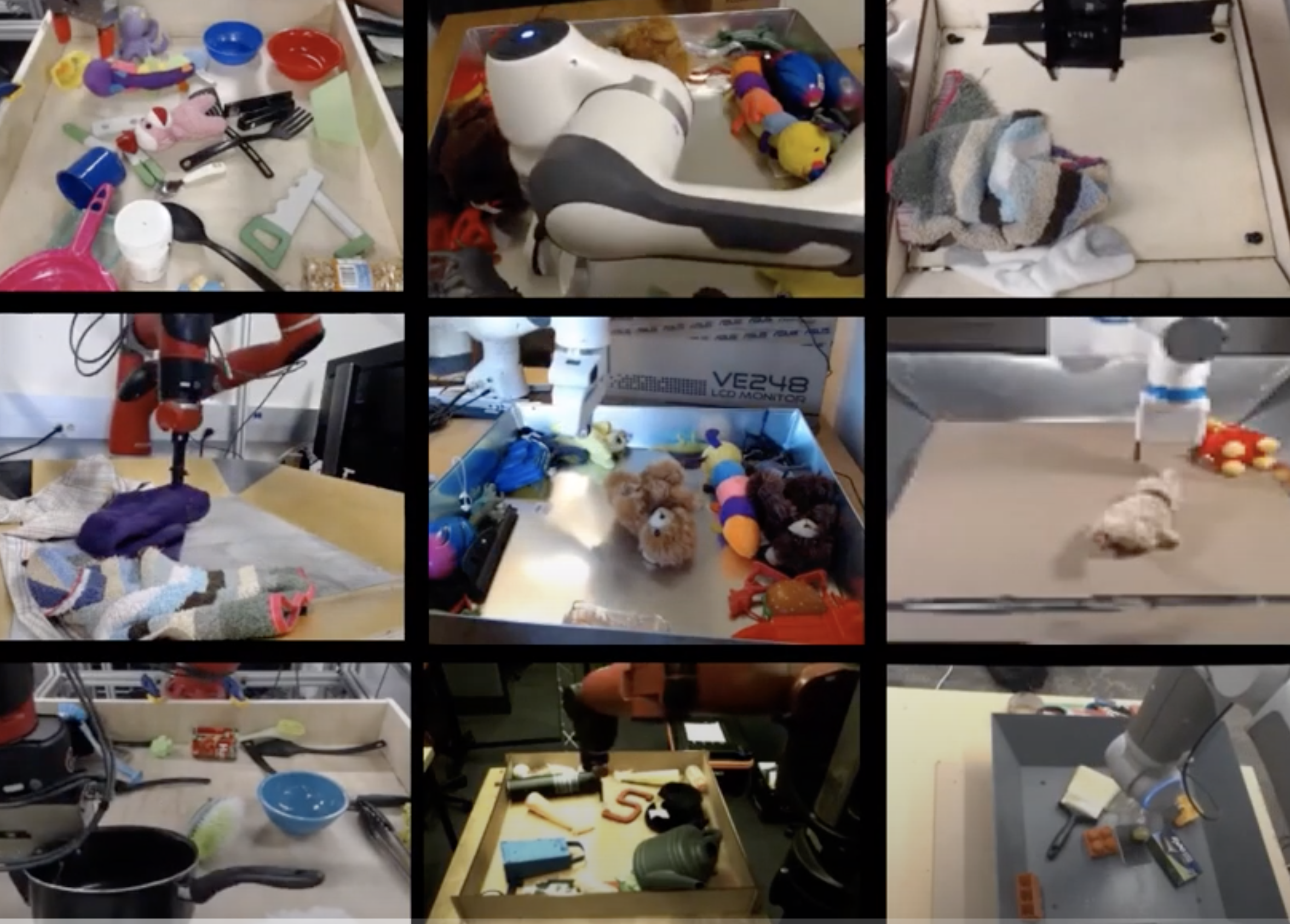

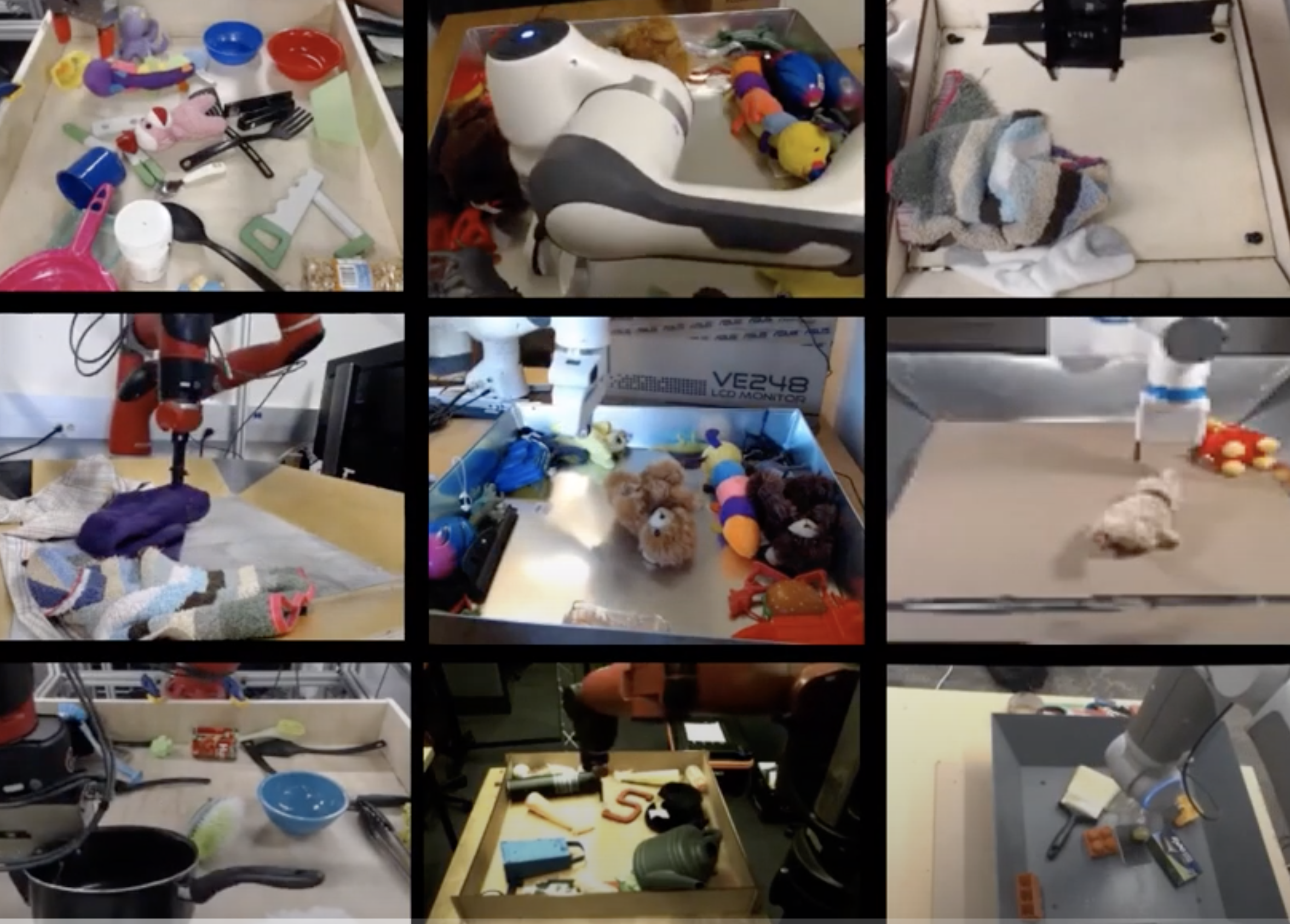

Bridge Data: Boosting Generalization of Robotic Skills with Cross-Domain Datasets

We collected a large multi-task, multi-domain demonstration dataset, which we call the Bridge Dataset, consisting of more than 7,300 demonstrations of 73 different tasks in 10 different toy-kitchen environments. Training on this data produces significantly more generalizable policies and allows transferring tasks from the bridge data to a target domain.

Long-Horizon Visual Planning with Goal-Conditioned Hierarchical Predictors

We propose a hierarchical prediction model that predicts sequences by recursive infilling. We use this model to devise a hierarchical planning approach that scales visual model-predictive control (MPC) to long-horizon tasks with hundreds of time steps.
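
As a rough illustration of prediction by recursive infilling: given a start and a goal state, a predictor fills in the midpoint, then recurses on each half, so a tree of depth d yields 2^d - 1 intermediate states. This is a toy sketch, not the paper's model: `midpoint_average` is a hypothetical stand-in (element-wise averaging of state vectors) for the learned goal-conditioned predictor, and the names `infill` and `predict_mid` are illustrative.

```python
from typing import Callable, List, Sequence

State = List[float]


def midpoint_average(a: Sequence[float], b: Sequence[float]) -> State:
    """Hypothetical stand-in for a learned predictor: element-wise mean of the endpoints."""
    return [(x + y) / 2.0 for x, y in zip(a, b)]


def infill(start: State, goal: State, depth: int,
           predict_mid: Callable[[State, State], State] = midpoint_average) -> List[State]:
    """Return start, ..., goal with 2**depth - 1 intermediate states,
    built coarse-to-fine by recursively predicting midpoints."""
    if depth == 0:
        return [start, goal]
    mid = predict_mid(start, goal)                    # coarsest level: the midpoint
    left = infill(start, mid, depth - 1, predict_mid)  # refine the first half
    right = infill(mid, goal, depth - 1, predict_mid)  # refine the second half
    return left + right[1:]                            # drop the duplicated midpoint


if __name__ == "__main__":
    print([s[0] for s in infill([0.0], [8.0], depth=3)])
    # -> [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
```

One intuition for the hierarchical ordering is that every prediction is conditioned on both of its endpoints, and the chain of predictions leading to any frame has length at most log2(T) for a T-step sequence, compared with up to T steps for frame-by-frame autoregressive prediction.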

OmniTact: A Multi-Directional High Resolution Touch Sensor

OmniTact is a novel high-resolution, multi-directional tactile sensor based on the GelSight sensor. We show that its omnidirectional sensing capabilities allow a robot to insert an electrical connector based purely on the sense of touch.

RoboNet: Large-Scale Multi-Robot Learning

RoboNet is a large-scale database for sharing robotic experience across different robots to learn generalizable skills. RoboNet contains data from 7 different robot platforms and allows transferring skills and dynamics models between different robots.

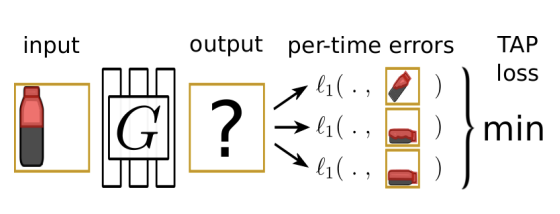

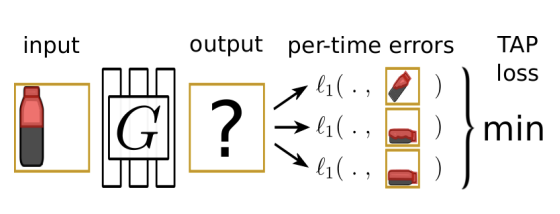

Time-Agnostic Prediction: Predicting Predictable Video Frames

Time-agnostic prediction (TAP) is a method for predicting intermediate images between a start frame and a goal frame for the purpose of planning. Instead of predicting frames at fixed time intervals, the training objective lets the optimizer choose which time step to predict.
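
Letting the optimizer choose the time step can be written as a minimum-over-time loss: the prediction x̂ is compared against every ground-truth intermediate frame x_t, and only the closest one is penalized, loss = min_t ||x̂ - x_t||². A minimal sketch, assuming plain vectors instead of images and squared error as the distance (the actual work trains image-prediction networks and may use other losses); `time_agnostic_loss` and `squared_error` are illustrative names.

```python
from typing import Sequence, Tuple


def squared_error(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared element-wise differences (assumed distance measure)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def time_agnostic_loss(prediction: Sequence[float],
                       intermediate_frames: Sequence[Sequence[float]]) -> Tuple[float, int]:
    """Minimum-over-time loss: score the prediction against every ground-truth
    intermediate frame and keep only the best match. Returns (loss, time index)."""
    if not intermediate_frames:
        raise ValueError("need at least one intermediate frame")
    errors = [squared_error(prediction, frame) for frame in intermediate_frames]
    best = min(range(len(errors)), key=errors.__getitem__)
    return errors[best], best


if __name__ == "__main__":
    frames = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
    loss, index = time_agnostic_loss([1.1, 0.9], frames)
    print(index, round(loss, 3))  # -> 1 0.02
```

Because only the best-matching frame contributes to the loss, a model trained this way is free to settle on whichever intermediate frame is easiest to predict, rather than being forced to commit to a fixed offset in time.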

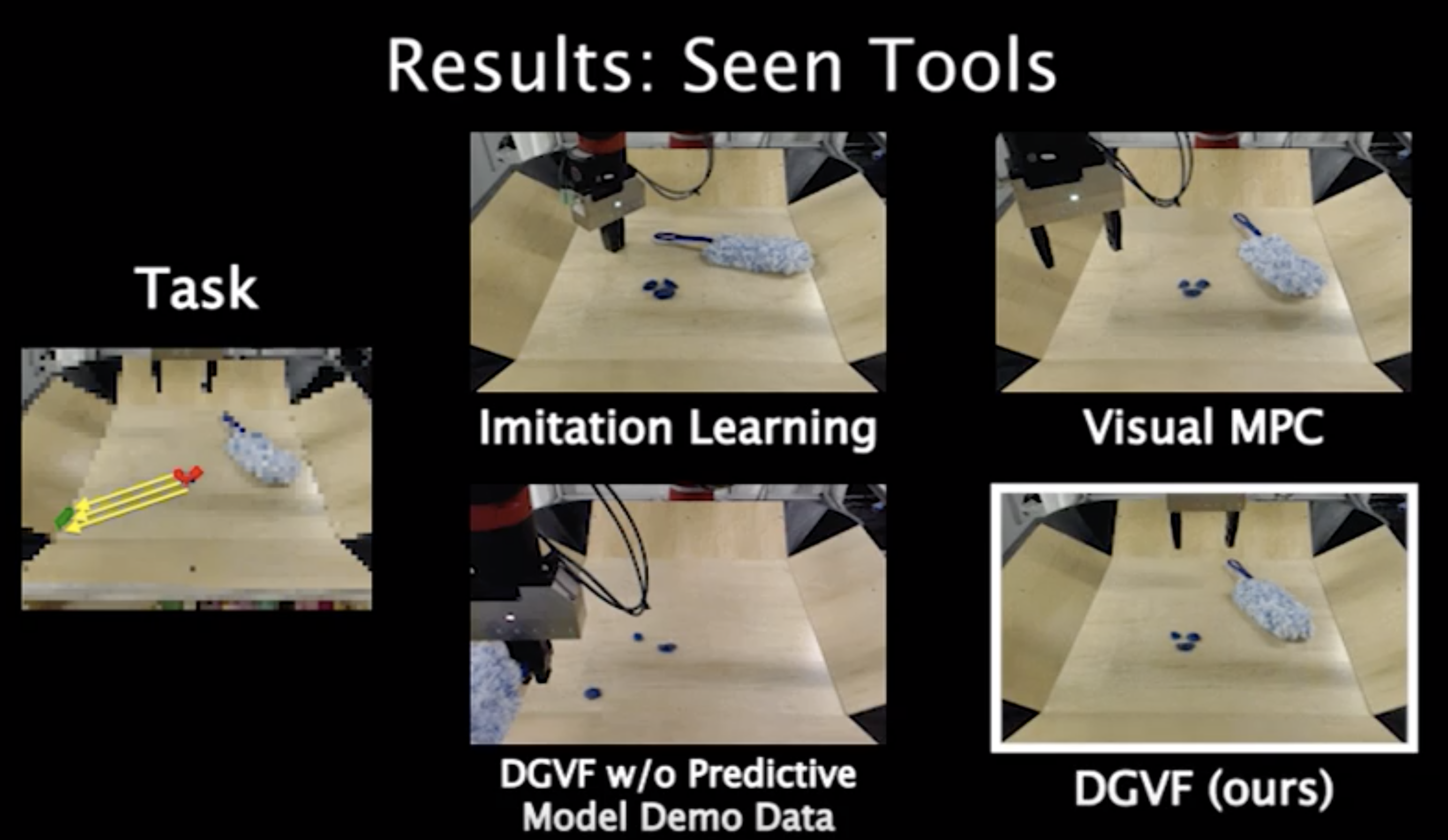

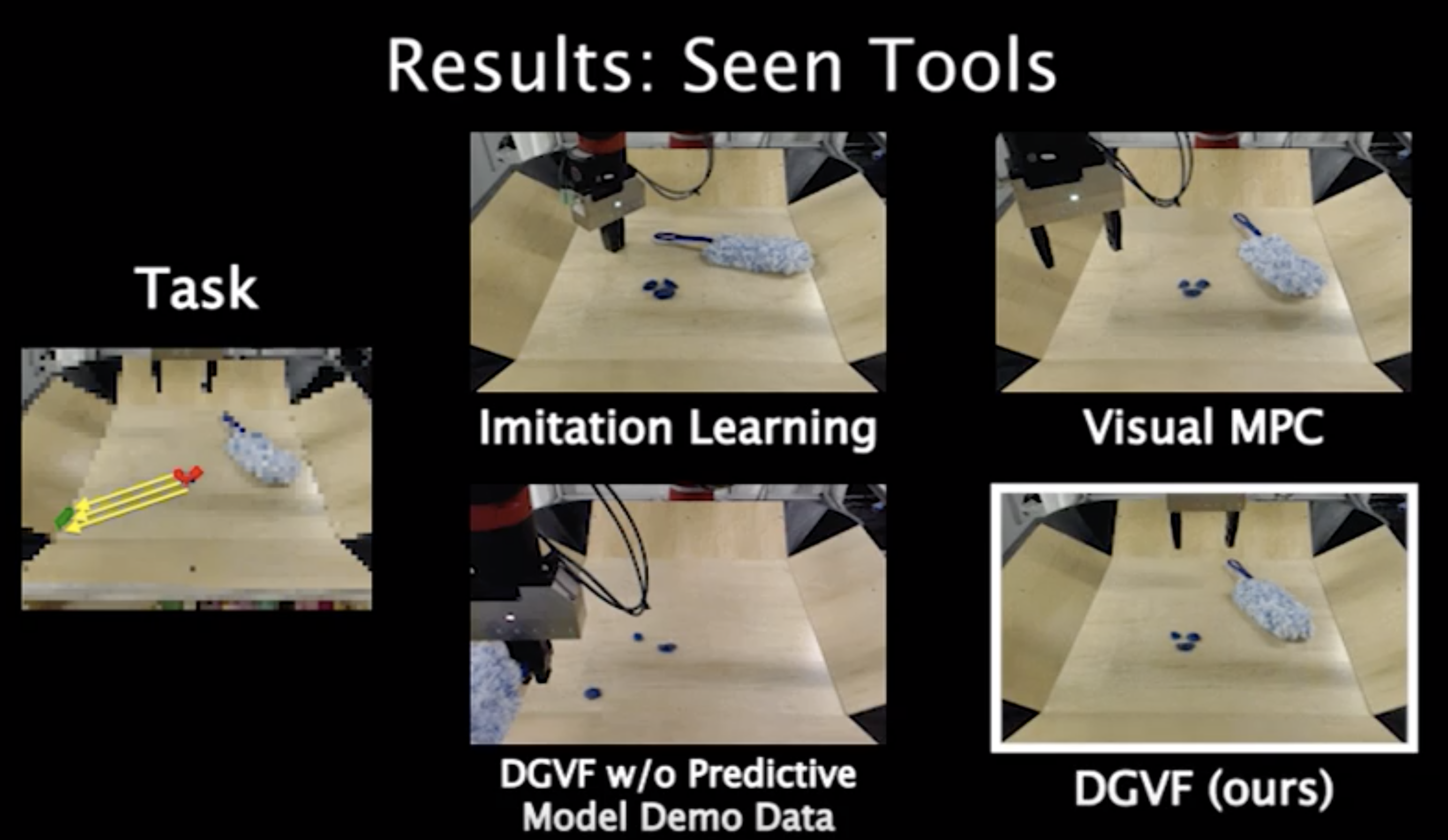

Improvisation through Physical Understanding: Using Novel Objects as Tools with Visual Foresight

We combine diverse demonstration data with self-supervised interaction data, aiming to leverage the interaction data to build generalizable models and the demonstration data to guide the model-based RL planner to solve complex tasks.

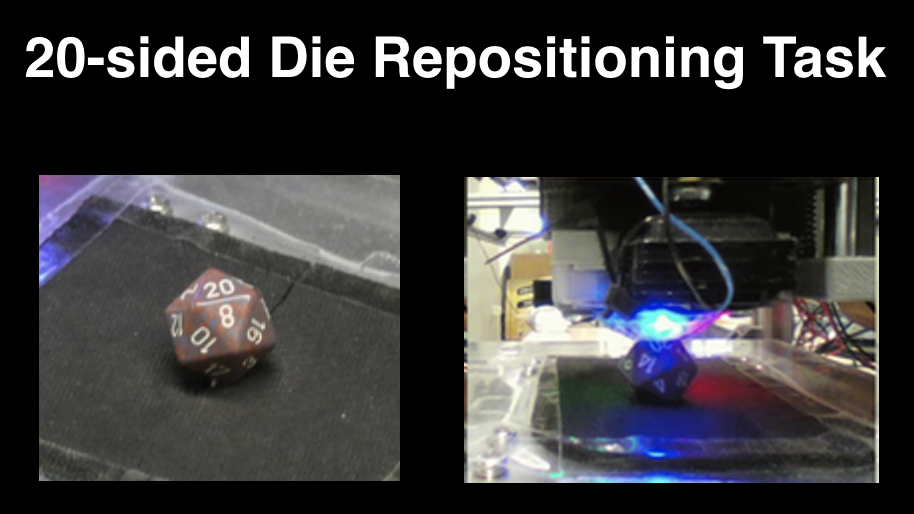

Manipulation by Feel: Touch-Based Control with Deep Predictive Models

We propose deep tactile MPC, a framework for learning to perform tactile servoing from raw tactile sensor inputs, without manual supervision. We show that this method enables a robot equipped with a GelSight-style tactile sensor to manipulate a ball and a 20-sided die.
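
Controllers in the visual-foresight family plan by sampling candidate action sequences, scoring them with the learned predictive model, and executing only the first action before replanning. A minimal sketch of that loop, using the cross-entropy method (CEM) as the sampling-based optimizer and assuming a toy additive dynamics function in place of a learned video or tactile prediction model, with squared distance to a goal state as the cost. All names (`toy_dynamics`, `cem_plan`, `mpc_action`) and hyperparameters are hypothetical; the published systems' cost functions and optimizer settings may differ.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]
Model = Callable[[Sequence[float], Sequence[float]], Vector]


def toy_dynamics(state: Sequence[float], action: Sequence[float]) -> Vector:
    """Hypothetical stand-in for a learned predictive model: next = state + action."""
    return [s + a for s, a in zip(state, action)]


def rollout_cost(state: Sequence[float], actions: Sequence[Sequence[float]],
                 goal: Sequence[float], model: Model) -> float:
    """Roll the model forward through an action sequence; return squared distance to goal."""
    current = list(state)
    for action in actions:
        current = model(current, action)
    return sum((c - g) ** 2 for c, g in zip(current, goal))


def cem_plan(state: Sequence[float], goal: Sequence[float], model: Model = toy_dynamics,
             horizon: int = 3, samples: int = 200, elites: int = 20,
             iterations: int = 4, seed: int = 0) -> List[Vector]:
    """CEM: sample action sequences from a diagonal Gaussian, keep the lowest-cost
    elites, refit the Gaussian to them, repeat. Returns the final mean sequence."""
    rng = random.Random(seed)
    dim = len(goal)  # toy assumption: actions live in the same space as states
    mean = [[0.0] * dim for _ in range(horizon)]
    std = [[1.0] * dim for _ in range(horizon)]
    for _ in range(iterations):
        candidates = [
            [[rng.gauss(mean[t][d], std[t][d]) for d in range(dim)] for t in range(horizon)]
            for _ in range(samples)
        ]
        candidates.sort(key=lambda seq: rollout_cost(state, seq, goal, model))
        best = candidates[:elites]
        for t in range(horizon):
            for d in range(dim):
                values = [seq[t][d] for seq in best]
                m = sum(values) / len(values)
                var = sum((v - m) ** 2 for v in values) / len(values)
                mean[t][d] = m
                std[t][d] = max(var ** 0.5, 1e-3)  # floor keeps sampling non-degenerate
    return mean


def mpc_action(state: Sequence[float], goal: Sequence[float],
               model: Model = toy_dynamics) -> Vector:
    """MPC: plan a full sequence, but return only its first action for execution."""
    return cem_plan(state, goal, model)[0]


if __name__ == "__main__":
    state, goal = [0.0, 0.0], [1.5, -0.9]
    for step in range(3):
        action = mpc_action(state, goal)
        state = toy_dynamics(state, action)  # in a real system: execute, then observe
        print(step, [round(x, 2) for x in state])
```

Replanning after every executed action is what makes the controller closed-loop: errors in the model's predictions are corrected by the next observation rather than accumulated over the whole plan.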

Robustness via Retrying: Closed-Loop Robotic Manipulation with Self-Supervised Learning

To enable a robot to continuously retry a task, we devise a self-supervised algorithm for learning image registration, which can keep track of objects of interest for the duration of the trial. We demonstrate that this idea can be combined with a video-prediction based controller to enable complex behaviors to be learned from scratch using only raw visual inputs, including grasping, repositioning objects, and non-prehensile manipulation.

Self-Supervised Visual Planning with Temporal Skip Connections

We present three simple improvements to the self-supervised visual foresight algorithm that lead to substantially better visual planning capabilities. Our method can perform tasks that require longer-term planning and involve multiple objects.

© 2020 Frederik Ebert